Getting Started¶

Now we will look at four – probably the four most frequent – tasks that an end-user would be likely to use the tool for.

Loading Data to Database¶

First we have to initialize the database. This is done with the script init_db.py.

There are a pair of scripts, init_db.py and load_to_db.py, for loading the data to the database. First, make sure the data is in the format we distributed it in. For reference, this means:

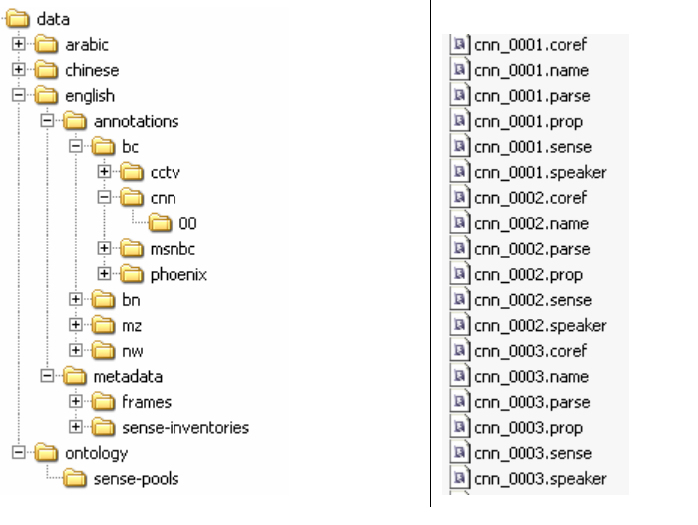

.../data/<lang>/annotations/<genre>/<source>/<section>/<file>

Now we initialize the database. This creates the relevant tables:

$ python on/tools/init_db.py --init my_db my_server my_name ontonotes-release-4.0/

Note that d_loc doesn’t end in “/data/”.

Once the tables are created, we can load frames or sense inventories to the data for languages we desire. Load the frames before the sense inventories:

$ python on/tools/init_db.py --frames=english my_db my_server my_name \

ontonotes-release-4.0/

$ python on/tools/init_db.py --sense-inventories=english my_db my_server my_name \

ontonotes-release-4.0/

Next, create a configuration file patterned after the example below: (shipped as tools/config.example)

###

### This is a configuration file in the format expected by

### ConfigParser. Any line beginning with a hash mark (#) is a

### comment. Lines beginning with three hash markes are used for

### general commentary, lines with only one are example configuration

### settings

###

### You must set corpus.data_in or fill out the section db

### and will likely want to change other settings

###

### Note that fields that take lists (for example banks) want space

### separated elements. For example:

###

### banks: parse prop coref sense

###

### Also note that all settings in the configuration file may be

### overridden on the command line if the tool is using

### on.common.util.load_options as all the tools here do. The syntax

### for that is:

###

### python some_tool -c some_config Section.Option=Value

###

### For example:

###

### python load_to_db.py -c config.example corpus.data_in=/corpus/data

###

### Because we use spaces to separate elements of lists, you may need

### to put argument values in quotes:

###

### python load_to_db.py -c config.example corpus.banks="name prop"

###

### In general, each section here is used by one api function.

###

[corpus]

###### This section is used by on.ontonotes.__init__ ######

###

###

### data_in: Where to look for the data

###

### this should be a path ending in 'data/'; the root of the ontonotes

### file system heirarchy.

data_in: /path/to/the/data/

### If you set data_in to a specific parse file, as so:

#

# data_in: /path/to/the/data/english/annotations/nw/wsj/00/wsj_0020.parse

#

### Then only that document will be loaded and you can ignore the next

### paragraph on the load variable and leave it unset

### What to load:

###

### There is a heirarchy: lang / genre / source. We specify what to

### load in the form lang-genre-source, with the final term optional.

###

### The example configuration below loads only the wsj (which is in

### english.nw):

load: english-nw-wsj

### Note that specific sources are optional. To load all the chinese

### broadcast news data, you would set load to:

#

# load: chinese-bn

#

### One can also load multiple sections, for example if one wants to

### work with english and chinese broadcast communications data (half

### of which is parallel):

#

# load: english-bc chinese-bc

#

### This a flexible configuration system, but if it is not

### sufficiently flexible, one option would be to look at the source

### code for on.ontonotes.from_files() to see how to manually and

### precisely select what data is loaded.

###

### One can also load only some individual documents. The

### configuration values that control this are:

###

### suffix, and

### prefix

###

### The default, if none of these are set, is to load all files

### for each loaded source regardless of their four digit id. This is

### equivalent to the setting either of them to nothing:

#

# prefix:

# suffix:

#

###

### Set them to space separated lists of prefixes and suffixes:

#

# suffix: 6 7 8

# prefix: 00 01 02

#

### The above settings would load all files whose id ends in 6, 7, or

### 8 and whose id starts with 00, 01, or 02.

###

### We can also set the granularity of loading. A subcorpus is an

### arbitrary collection of documents, that by default will be all the

### documents for one source. If we want to have finer grained

### divisions, we can. This means that the code:

###

### # config has corpus.load=english-nw

### a_ontonotes = on.ontonotes.from_files(config)

### print len(a_ontonotes)

###

### Would produce different output for each granularity setting. If

### granularity=source, english-nw has both wsj and xinhua, so the

### ontonotes object would contain two subcorpus instances and it

### would print "1". For granularity=section, there are 25 sections

### in the wsj and 4 in xinhua, so we would have each of these as a

### subcorpus instance and print "29". For granularity=file, there

### are a total of 922 documents in all those sections, so it would

### create 922 subcorpus objects each containing only a single

### document.

###

granularity: source

# granularity: section

# granularity: file

###

### Once we've selected which documents to put in our subcorpus with

### on.ontonotes.from_files, we need to decide which banks to load.

### That is, do we want to load the data in the .prop files? What

### about the .coref files?

###

banks: parse coref sense name parallel prop speaker

### You need not load all the banks:

#

# banks: parse coref sense

# banks: parse

#

###

### The name and sense data included in this distribution is all word

### indexed. If you want to process different data with different

### indexing (indexing that counts traces) then set these varibles

### apropriately.

wsd-indexing: word # token | nword_vtoken | notoken_vword

name-indexing: word # token

###

### Normally, when you load senses or propositions, the metadata that

### tells you how to interpret them is also loaded. If you don't wish

### to load these, generally because they are slow to load, you can

### say so here. A value of "senses" means "don't load the sense

### inventories" and a value of "frames" means "don't load the frame

### files". One can specify both.

###

### The default value is equivalent to "corpus.ignore_inventories="

### and results in loading both of them as needed.

###

#

# ignore-inventories: senses frames

# [db]

###### This section is used by on.ontonotes.db_cursor as well ######

###### as other functions that call db_cursor() such as ######

###### load_banks ######

###

### Configuration information for the db

#

# db: ontonotes_v3

# host: your-mysql-host

# db-user: your-mysql-user

#

Be sure to include a db section so the script has information about what database to load to and where to find it.

Now we can run tools.load_to_db to actually complete the loading (note that the exact output will depend on the options you chose in the configuration file. Here we’re loading parses and word sense data for the Wall Street Journal sections 02 and 03):

$ python on/tools/load_to_db.py --config=load_to_db.conf

Loading english

nw

wsj

....[...].... found 200 files starting with any of ['02', '03'] in the \

- subcorpus all@wsj@nw@en@on

- Initializing DB... writing the type tables to db................done. Loading all@wsj@nw@en@on reading the treebank .....[...]............. 4646 trees in the treebank reading the sense bank .......[...]......... reading the sense inventory files ..[...]... 2164 aligning and enriching treebank with senses .........[...]..... writing document bank to db.....[...].......done. writing treebank to db..........[...].......done. writing sense bank to db........[...].......done.

At this point the database is populated and usable.

The source code of tools.load_to_db is a good example of how to use several aspects of the API.

Loading and Dumping Data from Database¶

Similarly, you can dump data from the database with the script tools/files_from_db.py. Again create a configuration file based on config.example, but this time add to the configuration:

[FilesFromDb]

out_dir: /path/to/my/output/directory

Then run the code as:

$ python on/tools/files_from_db.py --config files_from_db.conf

If you for some reason wanted to send files to a different output directory than specified in the config file, you could override it on the command line:

$ python on/tools/files_from_db.py --config files_from_db.conf \

FilesFromDb.out_dir=/path/to/another/output/directory

This form of command line overriding of configuration variables can be quite useful and works for all variables. All scripts use on.common.util.load_options() to parse command line arguments and load their config file. The load_options function calls on.common.util.parse_cfg_args() to deal with command line config overrides.